Tracing unexpected behaviour in Corosync's address selection

We've been looking at some of Corosync's internals recently, spurred on by one of our new HA (highly-available) clusters spitting the dummy during testing. What we found isn't a "bug" per se (we're good at finding those), but a case where the correct behaviour isn't entirely clear.

We thought the findings were worth sharing, and we hope you find them interesting even if you don't run any clusters yourself.

Disclaimer: We'd like to emphasise that this is purely research so far; we have yet to formalise it and perform further testing so we can ask the right questions of the Corosync devs.


Observed behaviour

Before signing off on cluster deployments we put everything through its paces to ensure that it's behaving as expected. This means plenty of failovers and other stress-testing to verify that the cluster handles adverse situations properly.

Our standard clusters comprise two nodes running Corosync+Pacemaker, with a "stack" of managed resources. A common example is HA MySQL: DRBD, a mounted filesystem, the MySQL daemon and a floating IP address for MySQL.

During routine testing for a new customer we saw the cluster suddenly partition itself and go up in flames. One side was suddenly convinced there were three nodes in the cluster and called in vain for a STONITH response, while the other was convinced that its buddy had been nuked from orbit and attempted to snap up the resources. What was going on!?

It was time to start poring over the logs for evidence. To understand what happened you need to know how Corosync communicates between nodes in the cluster.

A crash-course in Corosync

Linux HA is split into a number of parts that have changed significantly over time. At its simplest, you can consider two major components:

- A cluster engine that handles synchronisation and messaging; this is Corosync

- A cluster resource manager (CRM) that uses the engine to manage services and ensure they're running where they should be; this is Pacemaker

We're only interested in Corosync, specifically its communication layer.

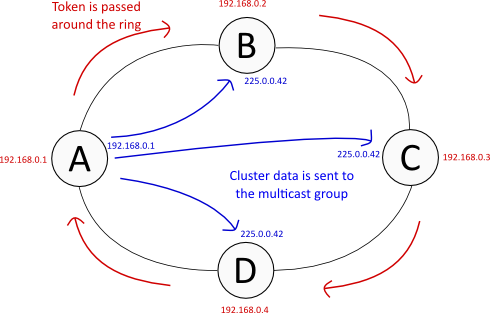

There are two major types of communication in Corosync: the shared cluster data, and a "token" which is passed around the ring of cluster nodes (this is a conceptual ring, not a physical network ring). The token is used to manage connectivity and provide the synchronisation guarantees necessary for the cluster to operate [1].

The token is always transferred by unicast UDP. Cluster data can be sent either by multicast UDP or unicast UDP - we use multicast. In either case, the source address is always a normal unicast address.

Given this, a 4-node cluster looks something like this:

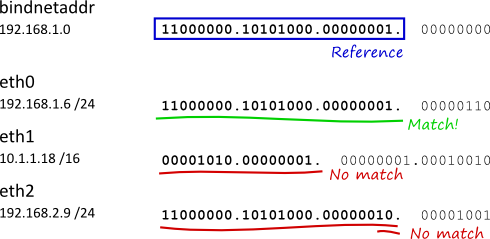
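To make the split between cluster data and the token a little more concrete, here's a deliberately tiny C model of the processing pattern described in footnote 1: multicast data is queued on every node as it arrives, and a node only works through its queue while it holds the token, before passing the token along the ring. It's a toy under heavy assumptions (no packet loss, no sequence numbers, no membership changes), and every name in it is hypothetical rather than part of Corosync's Totem implementation.

```c
/*
 * Toy model only: RING_SIZE, QUEUE_MAX, struct ring_node, multicast() and
 * pass_token() are all hypothetical names, not Corosync code.
 */
#define RING_SIZE 4
#define QUEUE_MAX 16

struct ring_node {
    int queue[QUEUE_MAX];   /* multicast messages received but not yet processed */
    int nqueued;
    int processed;          /* total messages this node has processed so far */
};

/* Multicast delivery: every node enqueues its own copy of the message. */
void multicast(struct ring_node ring[RING_SIZE], int msg)
{
    for (int i = 0; i < RING_SIZE; i++)
        if (ring[i].nqueued < QUEUE_MAX)
            ring[i].queue[ring[i].nqueued++] = msg;
}

/*
 * The current holder drains its queue, then hands the token (by unicast, in
 * the real protocol) to the next node in the conceptual ring.
 * Returns the index of the new token holder.
 */
int pass_token(struct ring_node ring[RING_SIZE], int holder)
{
    ring[holder].processed += ring[holder].nqueued;
    ring[holder].nqueued = 0;
    return (holder + 1) % RING_SIZE;
}
```

After two calls to `multicast()` and one full rotation of `pass_token()`, every node has processed both messages and the token is back where it started; a node that hasn't yet held the token still has its copies sitting in the queue.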

One convenient feature in Corosync is its automatic selection of source address. This is done by comparing the bindnetaddr config directive against all IP addresses on the system and finding a suitable match. The cool thing about this is that you can use the exact same config file for all nodes in your cluster, and everything should Just Work™.

Automatic source-address selection is always used for IPv4; it's not negotiable. It's never done for IPv6; addresses are used exactly as supplied to bindnetaddr.

Interestingly, you only supply an address to bindnetaddr, such as 192.168.0.42; CIDR notation is not used, as might commonly be expected when referring to a subnet. Instead, Corosync compares each of the system's addresses (plus the associated netmask) against bindnetaddr, applying the same netmask to both. This diagram demonstrates a typical setup:

While the example is somewhat contrived, we can see how the local address is determined as intended, with each address compared using its own interface's netmask.
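Here's a minimal sketch of that comparison as we understand it, for IPv4 only: mask both the candidate address and bindnetaddr with the candidate interface's own netmask, and compare the results. `bindnetaddr_matches()` is a hypothetical helper for illustration, not Corosync's code.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>

/*
 * Hypothetical helper: returns 1 if `local` and `bindnetaddr` are on the same
 * network once both are masked with the local interface's `netmask`, 0 if not,
 * and -1 if any argument isn't a valid dotted-quad IPv4 address.
 */
int bindnetaddr_matches(const char *local, const char *netmask,
                        const char *bindnetaddr)
{
    struct in_addr ip, mask, spec;

    if (inet_pton(AF_INET, local, &ip) != 1 ||
        inet_pton(AF_INET, netmask, &mask) != 1 ||
        inet_pton(AF_INET, bindnetaddr, &spec) != 1)
        return -1;

    /* s_addr is in network byte order; bitwise AND and equality don't care. */
    return (ip.s_addr & mask.s_addr) == (spec.s_addr & mask.s_addr);
}
```

For example, `bindnetaddr_matches("192.168.0.1", "255.255.255.0", "192.168.0.42")` returns 1, whereas checking 10.1.1.1 with the same netmask and bindnetaddr returns 0. Only the masked bits are compared, so 192.168.0.0 would work just as well as 192.168.0.42 here.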

The problem as we see it

The problem has two parts. Firstly, it's possible for a floating Pacemaker-managed IP to match your bindnetaddr specification. As an example:

- 192.168.0.1/24 - static IP used for cluster traffic

- 192.168.0.42/24 - a floating "service IP" used for an HA daemon

Secondly, Corosync sometimes re-enumerates the network addresses on the host for automatic source-address selection [2]. We're not 100% sure of the circumstances under which this occurs, but a classic example is a rolling upgrade of the cluster software. A normal process would be to unmanage all your resources, stop Pacemaker and Corosync, upgrade, then bring them back up again and remanage your resources.

Taken together, this can lead to the cluster getting very confused when one host's unicast address for cluster traffic suddenly changes. Consider a 2-node cluster comprising nodes A and B:

1. A's address changes

2. The token drops as a result of the ring breaking

3. B thinks A is offline: A's new address isn't allowed through our outbound firewall rules, so B doesn't receive anything from A

4. A also thinks B is offline, because B can't hear A

5. In the meantime, A "discovers" a new cluster node: itself, operating on the new address!

This state is passed on to Pacemaker, which attempts to juggle the resources to satisfy its policy. For resources that were already running on A, it now sees duplicate copies, which isn't allowed. Original-A asks for new-A to be STONITH'd. Meanwhile, B is just trying to get all the resources started again.

This is decidedly not ideal. What really got us is that we hadn't seen this behaviour in any previously deployed cluster; something was different.

Normally we'll dedicate a separate subnet to cluster traffic, keeping it away from "production traffic" like load-balanced MySQL or HTTP. This time we didn't, opting to reuse the subnet. We did this because we don't like "polluting" a network segment with multiple IP subnets. We could have set up another VLAN to confine the subnet (avoiding pollution), but that would've meant configuring it on our switches just for two physical machines, which seemed like overkill.

Reusing the existing subnet for cluster traffic clearly had something to do with our problem, so it was time to go digging in Corosync to see what it does.

How Corosync can select a different address

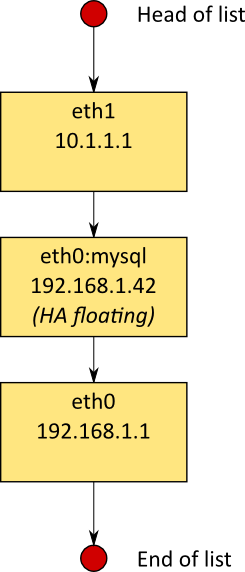

In the Corosync source there's a function called totemip_getifaddrs() that gets all the addresses from the kernel and puts them in a linked list. For simplicity, you can think of the entries as (name, IP) tuples. The name is based on the device's familiar name, but includes the label if one is present; e.g. eth0, eth0:00 and eth0:nfs are all valid.
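For a feel of what totemip_getifaddrs() starts from, here's a minimal sketch that walks the list returned by the standard getifaddrs() call and prints each IPv4 entry as a (name, IP) tuple, in the order the call returns them. `print_ipv4_tuples()` is a hypothetical name and this isn't Corosync's code. On Linux with glibc we'd expect labelled addresses to show up with their label (e.g. eth0:mysql) as the name.

```c
#include <arpa/inet.h>
#include <ifaddrs.h>
#include <netinet/in.h>
#include <stdio.h>
#include <sys/socket.h>

/*
 * Print each IPv4 address as a (name, IP) tuple, in getifaddrs() order.
 * Returns the number of tuples printed, or -1 if getifaddrs() fails.
 */
int print_ipv4_tuples(void)
{
    struct ifaddrs *ifap;
    if (getifaddrs(&ifap) != 0)
        return -1;

    int count = 0;
    for (struct ifaddrs *ifa = ifap; ifa != NULL; ifa = ifa->ifa_next) {
        /* Skip entries with no address, and anything that isn't IPv4. */
        if (ifa->ifa_addr == NULL || ifa->ifa_addr->sa_family != AF_INET)
            continue;

        const struct sockaddr_in *sin = (const struct sockaddr_in *)ifa->ifa_addr;
        char buf[INET_ADDRSTRLEN];
        if (inet_ntop(AF_INET, &sin->sin_addr, buf, sizeof(buf)) == NULL)
            continue;

        printf("(%s, %s)\n", ifa->ifa_name, buf);
        count++;
    }

    freeifaddrs(ifap);
    return count;
}
```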

The list is built by prepending each item to the head of the list, so "later" addresses appear at the head. This means that when Corosync traverses the list, it hits the addresses in the reverse order of what a human would tend to expect. (The underlying order is whatever getifaddrs() returns; that's probably arbitrary as far as guarantees go, but likely "sane" as far as we're concerned.)
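To see why prepending reverses things, here's a minimal C sketch using a hand-rolled singly linked list. The names `struct addr_node`, `prepend()`, `append()` and `list_order()` are hypothetical, not Corosync's list API, though for our purposes `prepend()` and `append()` model what `list_add()` and `list_add_tail()` do.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical, cut-down list node; Corosync's real entries carry much more. */
struct addr_node {
    const char *name;
    struct addr_node *next;
};

/* Insert at the head, as the unpatched code's list_add() effectively does. */
static struct addr_node *prepend(struct addr_node *head, struct addr_node *n)
{
    n->next = head;
    return n;
}

/* Insert at the tail, as list_add_tail() does. */
static struct addr_node *append(struct addr_node *head, struct addr_node *n)
{
    n->next = NULL;
    if (head == NULL)
        return n;
    struct addr_node *p = head;
    while (p->next != NULL)
        p = p->next;
    p->next = n;
    return head;
}

/*
 * Build a list from names given in "kernel" order (at most 16), then write the
 * order a traversal would visit them into `out`, comma-separated.
 * `outlen` must be at least 1.
 */
void list_order(const char *const kernel_order[], int n, int use_prepend,
                char *out, size_t outlen)
{
    struct addr_node nodes[16];
    struct addr_node *head = NULL;

    for (int i = 0; i < n && i < 16; i++) {
        nodes[i].name = kernel_order[i];
        head = use_prepend ? prepend(head, &nodes[i]) : append(head, &nodes[i]);
    }

    out[0] = '\0';
    for (struct addr_node *p = head; p != NULL; p = p->next) {
        if (out[0] != '\0')
            strncat(out, ",", outlen - strlen(out) - 1);
        strncat(out, p->name, outlen - strlen(out) - 1);
    }
}
```

Given a kernel order of eth0, eth0:mysql, eth1, prepending yields `eth1,eth0:mysql,eth0` while appending preserves `eth0,eth0:mysql,eth1`.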

When Corosync searches the linked list for a match, it stops on the first one it finds. Of course our list is backwards, so a newly added address, such as a floating HA IP, is likely to be selected if it's viable.

This diagram shows an entirely plausible linked list that could be produced by totemip_getifaddrs():

If we've specced bindnetaddr as 192.168.0.0, there are now two valid addresses for consideration, and the floating HA address is first in line.
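A minimal sketch of that first-match search, assuming the list has already been built. `struct if_tuple` and `first_match()` are hypothetical, and the addresses in the usage note are illustrative rather than taken from a real host.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stddef.h>
#include <sys/socket.h>

/* Hypothetical stand-in for one entry in the address list. */
struct if_tuple {
    const char *name;     /* e.g. "eth0", or a labelled alias like "eth0:mysql" */
    const char *ip;       /* dotted-quad IPv4 address */
    const char *netmask;  /* that address's netmask */
};

/*
 * Walk the list in order and return the index of the first entry whose
 * masked address equals bindnetaddr masked the same way; -1 if none match.
 * Once a match is found, later candidates are never considered.
 */
int first_match(const struct if_tuple list[], size_t n, const char *bindnetaddr)
{
    struct in_addr spec;
    if (inet_pton(AF_INET, bindnetaddr, &spec) != 1)
        return -1;

    for (size_t i = 0; i < n; i++) {
        struct in_addr ip, mask;
        if (inet_pton(AF_INET, list[i].ip, &ip) != 1 ||
            inet_pton(AF_INET, list[i].netmask, &mask) != 1)
            continue;   /* skip malformed entries */
        if ((ip.s_addr & mask.s_addr) == (spec.s_addr & mask.s_addr))
            return (int)i;
    }
    return -1;
}
```

With a prepended list of eth1 (10.1.1.1), eth0:mysql (192.168.0.42), eth0 (192.168.0.1), all /24, `first_match()` returns index 1 for a bindnetaddr of 192.168.0.0: the floating address wins even though eth0's static address is also valid. With the same entries in appended order (eth0 first), it returns index 0.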

This is good - we know what's going on now. We need a solution though, and of course this cluster is due to be handed over yesterday.

The hack

A workaround is simple enough, and it'll let us keep our overlapping subnets. It should be noted that this is very much a workaround - it's not even clear that the behaviour we've seen is a problem, the answer could be as simple as "you shouldn't do that".

We've chosen to tackle the problem in two ways, to provide a little defence in depth. Either one should do the job on its own, but it doesn't hurt to be sure.

- Skip the IP if the name has a colon in it. It's specific to the way we handle IPs, but will probably work for most people.

- Append to the tail of the list to maintain the expected ordering.

The patch is really simple. For brevity we only show the Linux code path; the same change applies to the Solaris code further down the file.

```diff
diff -ruN corosync-2.0.0.orig/exec/totemip.c corosync-2.0.0/exec/totemip.c
--- corosync-2.0.0.orig/exec/totemip.c 2012-04-10 21:09:12.000000000 +1000
+++ corosync-2.0.0/exec/totemip.c 2012-05-09 15:03:51.272429481 +1000
@@ -358,6 +358,9 @@
             (ifa->ifa_netmask->sa_family != AF_INET && ifa->ifa_netmask->sa_family != AF_INET6))
             continue ;
 
+        if (ifa->ifa_name && strchr(ifa->ifa_name, ':'))
+            continue ;
+
         if_addr = malloc(sizeof(struct totem_ip_if_address));
         if (if_addr == NULL) {
             goto error_free_ifaddrs;
@@ -384,7 +387,7 @@
             goto error_free_addr_name;
         }
 
-        list_add(&if_addr->list, addrs);
+        list_add_tail(&if_addr->list, addrs);
     }
 
     freeifaddrs(ifap);
```

Why this sort of workaround works

The getifaddrs() interface used is pretty limited. The kernel has additional flags to denote Primary and Secondary addresses, which might be useful when selecting a good source address, but they're not available through getifaddrs(). As such, Corosync has no way to use additional criteria to filter the results of the query when selecting a source address.
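For the curious, here's a Linux-only sketch of asking the kernel directly over rtnetlink instead of going through getifaddrs(). It sends an `RTM_GETADDR` dump request and reports whether each IPv4 address carries the `IFA_F_SECONDARY` flag. This is our illustration, not something Corosync does, and `dump_ipv4_primary_secondary()` is a hypothetical name. With 192.168.0.42/24 added to eth0 after 192.168.0.1/24, we'd expect the floating address to be reported as secondary.

```c
#include <arpa/inet.h>
#include <linux/if_addr.h>
#include <linux/netlink.h>
#include <linux/rtnetlink.h>
#include <stdio.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/*
 * Linux-only: dump IPv4 addresses via rtnetlink and print label, address and
 * primary/secondary status. Returns the number of addresses seen, or -1 on error.
 */
int dump_ipv4_primary_secondary(void)
{
    int fd = socket(AF_NETLINK, SOCK_RAW, NETLINK_ROUTE);
    if (fd < 0)
        return -1;

    struct {
        struct nlmsghdr nlh;
        struct ifaddrmsg ifa;
    } req;
    memset(&req, 0, sizeof(req));
    req.nlh.nlmsg_len = NLMSG_LENGTH(sizeof(struct ifaddrmsg));
    req.nlh.nlmsg_type = RTM_GETADDR;
    req.nlh.nlmsg_flags = NLM_F_REQUEST | NLM_F_DUMP;
    req.nlh.nlmsg_seq = 1;
    req.ifa.ifa_family = AF_INET;              /* IPv4 only */

    struct sockaddr_nl kernel;
    memset(&kernel, 0, sizeof(kernel));
    kernel.nl_family = AF_NETLINK;             /* nl_pid 0 addresses the kernel */

    if (sendto(fd, &req, req.nlh.nlmsg_len, 0,
               (struct sockaddr *)&kernel, sizeof(kernel)) < 0)
        goto fail;

    unsigned int buf[4096];                    /* int-aligned receive buffer */
    int count = 0;
    for (;;) {
        int len = (int)recv(fd, buf, sizeof(buf), 0);
        if (len <= 0)
            goto fail;
        for (struct nlmsghdr *nh = (struct nlmsghdr *)buf; NLMSG_OK(nh, len);
             nh = NLMSG_NEXT(nh, len)) {
            if (nh->nlmsg_type == NLMSG_DONE) {
                close(fd);
                return count;
            }
            if (nh->nlmsg_type == NLMSG_ERROR)
                goto fail;
            if (nh->nlmsg_type != RTM_NEWADDR)
                continue;

            struct ifaddrmsg *ifa = NLMSG_DATA(nh);
            char addr[INET_ADDRSTRLEN] = "?";
            char label[32] = "?";
            int rtlen = (int)IFA_PAYLOAD(nh);
            for (struct rtattr *rta = IFA_RTA(ifa); RTA_OK(rta, rtlen);
                 rta = RTA_NEXT(rta, rtlen)) {
                if (rta->rta_type == IFA_LOCAL)        /* the local IPv4 address */
                    inet_ntop(AF_INET, RTA_DATA(rta), addr, sizeof(addr));
                else if (rta->rta_type == IFA_LABEL)   /* e.g. "eth0:mysql" */
                    snprintf(label, sizeof(label), "%s", (const char *)RTA_DATA(rta));
            }
            printf("%-16s %-15s %s\n", label, addr,
                   (ifa->ifa_flags & IFA_F_SECONDARY) ? "secondary" : "primary");
            count++;
        }
    }

fail:
    close(fd);
    return -1;
}
```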

We make a habit of labelling all our addresses, even the floating HA ones. That means it's easy to ignore them by checking for the colon-delimiter in the interface name. Ideally Corosync would have another (smarter) way of getting address information from the kernel, but portability concerns may make this difficult.
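Here's a minimal sketch of the selection with the colon rule applied, assuming labelled aliases should never be candidates. `struct if_tuple`, `is_labelled_alias()` and `first_unlabelled_match()` are hypothetical names for illustration; the real change is the two-line `strchr()` check in the patch.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stddef.h>
#include <string.h>
#include <sys/socket.h>

/* Hypothetical stand-in for one entry in the address list. */
struct if_tuple {
    const char *name;
    const char *ip;
    const char *netmask;
};

/* A colon in the name means a labelled alias, e.g. "eth0:mysql". */
static int is_labelled_alias(const char *name)
{
    return name != NULL && strchr(name, ':') != NULL;
}

/*
 * First-match search over the list, skipping labelled aliases entirely.
 * Returns the index of the chosen entry, or -1 if nothing qualifies.
 */
int first_unlabelled_match(const struct if_tuple list[], size_t n,
                           const char *bindnetaddr)
{
    struct in_addr spec;
    if (inet_pton(AF_INET, bindnetaddr, &spec) != 1)
        return -1;

    for (size_t i = 0; i < n; i++) {
        struct in_addr ip, mask;
        if (is_labelled_alias(list[i].name))
            continue;
        if (inet_pton(AF_INET, list[i].ip, &ip) != 1 ||
            inet_pton(AF_INET, list[i].netmask, &mask) != 1)
            continue;
        if ((ip.s_addr & mask.s_addr) == (spec.s_addr & mask.s_addr))
            return (int)i;
    }
    return -1;
}
```

Even with the list still in prepended order (eth1, eth0:mysql, eth0), a bindnetaddr of 192.168.0.0 now selects eth0 at index 2, because eth0:mysql is never considered.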

Other ways to dodge this

We've avoided this problem in the past by keeping cluster traffic on its own subnet, but that's not the only way to dodge it:

- Use a separate subnet and NIC for cluster traffic so this doesn't happen

- Use IPv6

- Alter the behaviour of bindnetaddr such that it will prefer an exact match if it's available, otherwise fall back to the smart selection as usual

For now we've opted to patch Corosync's behaviour ourselves. That'll cover us while we await upstream feedback.
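The exact-match option could look something like the following minimal sketch, assuming "exact" means the address is byte-for-byte equal to bindnetaddr. `struct if_tuple` and `select_with_exact_preference()` are hypothetical names; this is our guess at the behaviour, not an upstream Corosync feature.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stddef.h>
#include <sys/socket.h>

/* Hypothetical stand-in for one entry in the address list. */
struct if_tuple {
    const char *name;
    const char *ip;
    const char *netmask;
};

/*
 * Pass 1: an address identical to bindnetaddr wins outright.
 * Pass 2: otherwise fall back to the usual masked, first-match selection.
 * Returns the chosen index, or -1 if nothing matches.
 */
int select_with_exact_preference(const struct if_tuple list[], size_t n,
                                 const char *bindnetaddr)
{
    struct in_addr spec;
    if (inet_pton(AF_INET, bindnetaddr, &spec) != 1)
        return -1;

    for (size_t i = 0; i < n; i++) {
        struct in_addr ip;
        if (inet_pton(AF_INET, list[i].ip, &ip) == 1 && ip.s_addr == spec.s_addr)
            return (int)i;
    }

    for (size_t i = 0; i < n; i++) {
        struct in_addr ip, mask;
        if (inet_pton(AF_INET, list[i].ip, &ip) != 1 ||
            inet_pton(AF_INET, list[i].netmask, &mask) != 1)
            continue;
        if ((ip.s_addr & mask.s_addr) == (spec.s_addr & mask.s_addr))
            return (int)i;
    }
    return -1;
}
```

Specifying bindnetaddr as 192.168.0.1 would then pin the static address regardless of list order, while a network-style value such as 192.168.0.0 falls through to the old behaviour, floating-IP hazard and all.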

Footnotes

1. Cluster data is enqueued on each node when it's received. When a node receives the token, it processes the multicast data that has queued-up, does whatever it needs to, then passes the token to the next node.

2. The enumeration happens here, both for startup and "refreshing": https://github.com/corosync/corosync/blob/master/exec/totemip.c#L342